Introduction

The goal of the Two!Ears project is to develop an intelligent, active
computational model of auditory perception and experience in a multi-modal
context. In order to do so, the system must be able to recognise acoustic
sources and optical objects, and achieve perceptual organisation of sound in the
same manner as human listeners do. Bregman has referred to the latter phenomenon
as auditory scene analysis (ASA) [Bregman1990]. To reproduce this ability in a
machine system, a number of factors must be considered:

- ASA involves diverse sources of knowledge, including both primitive (innate)
  grouping heuristics and schema-driven (learned) grouping principles.
- Solving the ASA problem requires the close interaction of top-down and
  bottom-up processes through feedback loops.
- Auditory processing is flexible, adaptive, opportunistic and
  context-dependent.

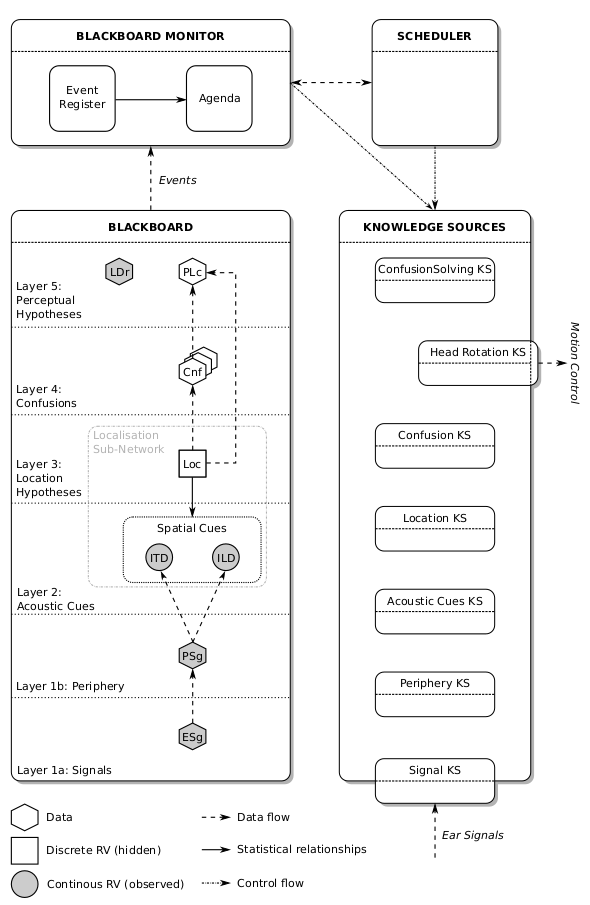

The characteristics of ASA are well-matched to those of blackboard
problem-solving architectures. A blackboard system consists of a group of
independent experts (knowledge sources) that communicate by reading and writing
data on a globally-accessible data structure (blackboard). The blackboard is
typically divided into layers, corresponding to data, hypotheses and partial
solutions at different levels of abstraction. Given the contents of the
blackboard, each knowledge source indicates the actions that it would like to
perform; these actions are then coordinated by a scheduler, which determines the
order in which actions will be carried out.
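The interaction between knowledge sources, blackboard layers and scheduler can be illustrated with a minimal Python sketch. It is not the Two!Ears implementation: the class names (`Blackboard`, `KnowledgeSource`, `Scheduler`), the layer names and the fixed-priority scheduling rule are all assumptions made for illustration.

```python
from collections import defaultdict


class Blackboard:
    """Globally accessible data structure, divided into named layers."""

    def __init__(self):
        self.layers = defaultdict(list)

    def write(self, layer, item):
        self.layers[layer].append(item)

    def read(self, layer):
        return list(self.layers[layer])


class KnowledgeSource:
    """Independent expert that reads one layer and writes to another."""

    def __init__(self, name, source, target, transform, priority):
        self.name = name
        self.source = source
        self.target = target
        self.transform = transform
        self.priority = priority  # hypothetical fixed priority
        self.consumed = 0         # number of source items already processed

    def can_act(self, blackboard):
        # The expert volunteers whenever unprocessed data is available.
        return len(blackboard.read(self.source)) > self.consumed

    def act(self, blackboard):
        item = blackboard.read(self.source)[self.consumed]
        self.consumed += 1
        blackboard.write(self.target, self.transform(item))


class Scheduler:
    """Decides the order in which the requested actions are carried out."""

    def __init__(self, blackboard, sources):
        self.blackboard = blackboard
        self.sources = sources

    def run(self):
        order = []
        while True:
            ready = [ks for ks in self.sources if ks.can_act(self.blackboard)]
            if not ready:
                return order
            chosen = max(ready, key=lambda ks: ks.priority)
            chosen.act(self.blackboard)
            order.append(chosen.name)


bb = Blackboard()
bb.write("signal", [0.1, 0.9, 0.2])
bb.write("signal", [0.0, 0.1, 0.0])

# Two toy experts: one extracts a peak value, one forms a hypothesis from it.
features = KnowledgeSource("features", "signal", "feature", max, priority=1)
detector = KnowledgeSource(
    "detector", "feature", "hypothesis",
    lambda peak: "source present" if peak > 0.5 else "silence",
    priority=2,
)

order = Scheduler(bb, [features, detector]).run()
print(order)
print(bb.read("hypothesis"))
```

Because the detector has the higher priority in this sketch, the scheduler lets it consume each feature as soon as it appears, so the two experts alternate. A different scheduling rule would change the order of actions without changing the knowledge sources themselves.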

Blackboard systems were introduced by [Erman1980] as an architecture for speech
understanding, in their Hearsay-II system. In the 1990s, a number of authors
described blackboard-based systems for machine hearing [Cooke1993],
[Lesser1995], [Ellis1996], [Godsmark1999]. All of these systems were in most
respects conventional blackboard architectures, in which the knowledge sources
employed rule-based heuristics. In contrast, the Two!Ears architecture aims to
exploit recent developments in machine learning, by combining the flexibility of
a blackboard architecture with powerful learning algorithms afforded by
probabilistic graphical models.

The general structure of the blackboard system is shown in Fig. 42. It consists
of different knowledge sources that can put data on the blackboard and retrieve
data from it. In addition, special knowledge sources can receive data from the
outside (ear signals) or send data to the outside (turn the head). The different
processes running on the blackboard are managed through monitoring and
scheduling, which are performed by two independent modules.
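As a rough illustration of this structure, the following Python sketch separates a monitoring module from a scheduling module and includes one knowledge source that brings data in from outside and one that acts on the outside. It is a toy under stated assumptions, not the Two!Ears API: every class name, the `priority` attribute, the canned level pairs standing in for ear signals, and the `world` dictionary standing in for the head are hypothetical.

```python
from collections import deque


class Blackboard:
    """Shared key/value store used by all knowledge sources."""

    def __init__(self):
        self.data = {}

    def put(self, key, value):
        self.data[key] = value

    def get(self, key):
        return self.data.get(key)


class EarSignalSource:
    """Brings data in from outside (here: canned left/right level pairs)."""
    priority = 0

    def __init__(self, blocks):
        self.blocks = deque(blocks)

    def wants_to_run(self, bb):
        return bool(self.blocks) and bb.get("ear_signals") is None

    def execute(self, bb, world):
        bb.put("ear_signals", self.blocks.popleft())


class Localiser:
    """Internal knowledge source: turns ear signals into a direction."""
    priority = 1

    def wants_to_run(self, bb):
        return bb.get("ear_signals") is not None

    def execute(self, bb, world):
        left, right = bb.get("ear_signals")
        if left > right:
            bb.put("direction", "left")
        elif right > left:
            bb.put("direction", "right")
        else:
            bb.put("direction", "front")
        bb.put("ear_signals", None)  # mark the block as processed


class HeadTurner:
    """Sends data to the outside (here: records a simulated head turn)."""
    priority = 2

    def wants_to_run(self, bb):
        return bb.get("direction") in ("left", "right")

    def execute(self, bb, world):
        world["head_turns"].append(bb.get("direction"))
        bb.put("direction", None)


class Monitor:
    """Collects the knowledge sources that currently request execution."""

    def __init__(self, sources):
        self.sources = sources

    def pending(self, bb):
        return [ks for ks in self.sources if ks.wants_to_run(bb)]


class Scheduler:
    """Selects which pending knowledge source is executed next."""

    def select(self, pending):
        return max(pending, key=lambda ks: ks.priority)


bb = Blackboard()
world = {"head_turns": []}
sources = [
    EarSignalSource([(0.8, 0.2), (0.3, 0.3), (0.1, 0.7)]),
    Localiser(),
    HeadTurner(),
]
monitor, scheduler = Monitor(sources), Scheduler()

while True:
    pending = monitor.pending(bb)
    if not pending:
        break
    scheduler.select(pending).execute(bb, world)

print(world["head_turns"])
```

Keeping the monitor and the scheduler as separate objects means the rule for detecting which knowledge sources want to run can change independently of the rule for choosing among them.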

Read on for further details on the blackboard architecture and on the knowledge sources, or start using the blackboard system.

| [Bregman1990] | Bregman, A. S. (1990), Auditory scene analysis: The perceptual organization of sound, The MIT Press, Cambridge, MA, USA. |
| [Erman1980] | Erman, L. D., Hayes-Roth, F., Lesser, V. R., and Reddy, D. R. (1980), “The Hearsay-II speech understanding system: integrating knowledge to resolve uncertainty,” Computing Surveys 12(2), pp. 213–253. |
| [Cooke1993] | Cooke, M., Brown, G. J., Crawford, M., and Green, P. (1993), “Computational auditory scene analysis: listening to several things at once,” Endeavour 17(4), pp. 186–190. |
| [Lesser1995] | Lesser, V. R., Nawab, S. H., and Klassner, F. I. (1995), “IPUS: An architecture for the integrated processing and understanding of signals,” Artificial Intelligence 77, pp. 129–171. |
| [Ellis1996] | Ellis, D. P. W. (1996), “Prediction-driven computational auditory scene analysis,” PhD thesis, Massachusetts Institute of Technology. |